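Continuing from the previous article, "A Concise PyTorch Tutorial (Part 1)", this part covers the multilayer perceptron, convolutional neural networks, and LSTMNet.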

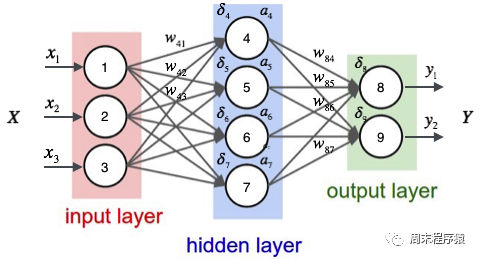

1. Multilayer Perceptron

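A multilayer perceptron (MLP) overcomes the limitations of a linear model by adding one or more hidden layers to the network. The nonlinear activation applied after each hidden layer (ReLU in the code below) is what makes this work, because stacked linear layers on their own still compute a linear function. The MLP is a simple neural network and an important foundation of deep learning. The implementation below trains an MLP on MNIST: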

```python
import numpy as np
import torch
from torch import optim

from data_util import load_mnist  # data loading helper (not shown in this part)


def build_model(input_dim, output_dim):
    # Two hidden layers of 512 units, each followed by ReLU and Dropout(0.2);
    # the last layer outputs raw class scores (logits), with no softmax
    return torch.nn.Sequential(
        torch.nn.Linear(input_dim, 512, bias=False),
        torch.nn.ReLU(),
        torch.nn.Dropout(0.2),
        torch.nn.Linear(512, 512, bias=False),
        torch.nn.ReLU(),
        torch.nn.Dropout(0.2),
        torch.nn.Linear(512, output_dim, bias=False),
    )


def train(model, loss, optimizer, x_val, y_val):
    model.train()              # training mode: Dropout is active
    optimizer.zero_grad()      # clear gradients left over from the previous step
    fx = model(x_val)          # forward pass: logits
    output = loss(fx, y_val)   # cross-entropy between logits and integer labels
    output.backward()          # backpropagate
    optimizer.step()           # update the parameters
    return output.item()


def predict(model, x_val):
    model.eval()               # evaluation mode: Dropout is disabled
    with torch.no_grad():      # no gradients are needed for inference
        output = model(x_val)
    return output.numpy().argmax(axis=1)


def main():
    torch.manual_seed(42)
    trX, teX, trY, teY = load_mnist(onehot=False)  # integer class labels
    trX = torch.from_numpy(trX).float()
    teX = torch.from_numpy(teX).float()
    trY = torch.tensor(trY, dtype=torch.long)      # CrossEntropyLoss expects int64 labels

    n_examples, n_features = trX.size()
    n_classes = 10
    model = build_model(n_features, n_classes)
    loss = torch.nn.CrossEntropyLoss(reduction='mean')
    optimizer = optim.Adam(model.parameters())
    batch_size = 100

    for i in range(100):
        cost = 0.
        num_batches = n_examples // batch_size
        for k in range(num_batches):
            start, end = k * batch_size, (k + 1) * batch_size
            cost += train(model, loss, optimizer,
                          trX[start:end], trY[start:end])
        predY = predict(model, teX)
        print("Epoch %d, cost = %f, acc = %.2f%%"
              % (i + 1, cost / num_batches, 100. * np.mean(predY == teY)))


if __name__ == "__main__":
    main()
```
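The ReLU layers in the MLP above are essential. The NumPy check below is an illustrative sketch, not part of the original tutorial. It uses small random weights shaped like `torch.nn.Linear` weights, which are `(out_features, in_features)` and applied as `x @ W.T`. It shows that two bias-free linear layers collapse into a single matrix, while inserting ReLU breaks that linearity. The helpers `relu` and `mlp` are hypothetical names used only for this illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Weights shaped like torch.nn.Linear: (out_features, in_features), no bias
W1 = rng.standard_normal((5, 4))
W2 = rng.standard_normal((3, 5))
x = rng.standard_normal((8, 4))  # a batch of 8 inputs with 4 features each


def relu(z):
    return np.maximum(z, 0.0)


# Without an activation, two linear layers equal ONE linear layer with weight W2 @ W1
stacked = (x @ W1.T) @ W2.T
collapsed = x @ (W2 @ W1).T
print(np.allclose(stacked, collapsed))


def mlp(z):
    # Hypothetical two-layer MLP: Linear -> ReLU -> Linear
    return relu(z @ W1.T) @ W2.T


# A linear map f satisfies f(x) + f(-x) == 0; the ReLU network does not
print(np.allclose(mlp(x) + mlp(-x), 0.0))
```

The first check succeeds for any weights. The second fails because `relu(h) + relu(-h)` equals `|h|`, which is not zero in general.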
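The model ends in a plain `Linear` layer with no softmax because `torch.nn.CrossEntropyLoss` takes raw logits and applies log-softmax followed by negative log-likelihood internally. The sketch below reproduces that computation in NumPy for the `reduction='mean'` case without class weights. `cross_entropy_mean` is a hypothetical helper, not a PyTorch function. Taking `argmax` over the logits in `predict` gives the same class as taking it over the softmax probabilities, because softmax preserves order.

```python
import numpy as np


def cross_entropy_mean(logits, targets):
    """Mean cross-entropy from raw logits and integer class labels (sketch).

    Intended to match torch.nn.CrossEntropyLoss(reduction='mean') with no
    class weights: log-softmax, pick the target class, average over the batch.
    """
    # Subtract each row's max before exponentiating, for numerical stability
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Log-probability of the correct class in each row, then the batch mean
    picked = log_probs[np.arange(len(targets)), targets]
    return -picked.mean()


logits = np.array([[2.0, 0.5, -1.0],
                   [0.0, 0.0, 0.0]])
targets = np.array([0, 2])
print(cross_entropy_mean(logits, targets))
```

As a sanity check, uniform logits over C classes give a loss of log(C). With C = 10, as for MNIST, that is about 2.30, roughly where an untrained model starts.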
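`train()` calls `model.train()` and `predict()` calls `model.eval()` because `torch.nn.Dropout` behaves differently in the two modes. PyTorch uses "inverted" dropout. During training, each element is zeroed with probability `p` and the survivors are scaled by `1 / (1 - p)`, which keeps the expected activation unchanged. In evaluation mode the layer is the identity. Below is a minimal NumPy sketch of that behaviour; the `dropout` function and its `training` flag are hypothetical stand-ins for the PyTorch module and its mode.

```python
import numpy as np


def dropout(x, p, training, rng):
    """Inverted dropout sketch (the scheme torch.nn.Dropout follows)."""
    if not training or p == 0.0:
        return x                             # eval mode: pass through unchanged
    keep = rng.random(x.shape) >= p          # keep each element with probability 1 - p
    return x * keep / (1.0 - p)              # rescale survivors so the mean is preserved


rng = np.random.default_rng(0)
h = np.ones((1000, 512))                     # pretend hidden activations, all 1.0
train_out = dropout(h, 0.2, training=True, rng=rng)
eval_out = dropout(h, 0.2, training=False, rng=rng)

print((train_out == 0).mean())   # fraction dropped, close to 0.2
print(train_out.mean())          # close to 1.0 thanks to the 1 / (1 - p) scaling
print(np.array_equal(eval_out, h))
```

Forgetting `model.eval()` before evaluating would leave this randomness switched on and make the reported test accuracy noisy.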
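The script uses `optim.Adam(model.parameters())` with default settings: `lr=1e-3`, `betas=(0.9, 0.999)` and `eps=1e-8`. The sketch below writes out the standard Adam update for a single parameter array in NumPy. It then runs the update on a toy quadratic, f(w) = (w - 3)^2, whose minimum at w = 3 is known. `adam_step` is a hypothetical helper; PyTorch's implementation additionally supports options such as weight decay that are omitted here.

```python
import numpy as np


def adam_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update (sketch; defaults mirror torch.optim.Adam's defaults)."""
    m = beta1 * m + (1 - beta1) * grad         # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2    # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)               # bias correction; t counts from 1
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v


# Toy problem: minimise (w - 3)^2, whose gradient is 2 * (w - 3)
w = np.array([0.0])
m = np.zeros_like(w)
v = np.zeros_like(w)
for t in range(1, 5001):
    grad = 2 * (w - 3.0)
    w, m, v = adam_step(w, grad, m, v, t, lr=0.01)
print(float(w[0]))  # should end up close to 3.0
```

Because the update divides by the root of the squared-gradient average, the first steps move each parameter by roughly `lr` regardless of the gradient's scale. This is one reason Adam's defaults often work without tuning, as they do in the script.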
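The training loop computes `num_batches = n_examples // batch_size`. Integer division means a trailing partial batch, if any, is silently skipped. The sketch below lists the `(start, end)` slice bounds that loop produces, plus a hypothetical `drop_last=False` variant that keeps the final short batch. `batch_bounds` is an illustrative helper, not a PyTorch API. `torch.utils.data.DataLoader` exposes the same choice through its `drop_last` argument.

```python
import math


def batch_bounds(n_examples, batch_size, drop_last=True):
    """(start, end) slice bounds for mini-batches (illustrative sketch)."""
    if drop_last:
        num_batches = n_examples // batch_size           # same as the loop in main()
    else:
        num_batches = math.ceil(n_examples / batch_size)  # keep the short last batch
    return [(k * batch_size, min((k + 1) * batch_size, n_examples))
            for k in range(num_batches)]


print(batch_bounds(10, 4))                   # examples 8 and 9 are never used
print(batch_bounds(10, 4, drop_last=False))  # the last batch holds only 2 examples
```

Assuming `load_mnist` returns the standard 60,000 MNIST training images, 60,000 is a multiple of 100 and nothing is dropped. The distinction matters once the dataset size or batch size changes.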